Predicting a (R)IoT: Maybe it doesn’t all need to be processed this instant

At November’s Structure 2017 conference, Vinod Khosla said: “Data anti-gravity is a great startup opportunity.”

Khosla was a founder of the once high-flying Sun Microsystems and is one of the premier venture capitalists in Silicon Valley, so people tend to pay attention to him. But what’s he talking about?

Is time up for data gravity?

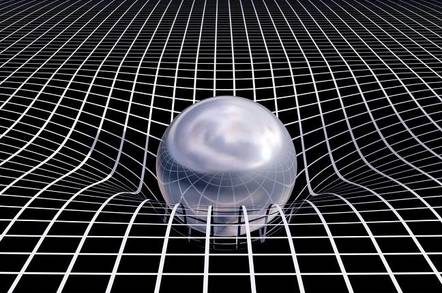

Apps and data are attracted to each other. And the more data there is, the greater the attractive force pulling applications and services to associate with that data. This attraction is partly physical in the sense of network characteristics like latency and throughput. However, it can also be thought of in terms of network effects more broadly; a large and rich collection of information – whether images, data about images, video, purchase data, or just about anything – tends to pull in more and more services that make use of that data.

Industrial IoT, in particular, is primarily about collecting large amounts of data and then doing things with that data. This is less true of most smart home applications. They often seem to be more focused on just replacing perfectly serviceable physical controls with less reliable and less secure versions that need a smartphone app to work. But I digress.

That data is collected by sensors. Simple examples include thermometers, microphones, and accelerometers. These devices are typically simple and cheap and may need to run off a battery. They rarely have much computing power and need to ship data off someplace else so it can actually be put to use.

Dave McCrory, who coined the term data gravity, has a long list of factors that can affect it. For example, even if you could store every byte that some distributed system collects in a central location, would you necessarily want to? There may be compliance risks with personally identifiable information, especially given the European Union’s General Data Protection Regulation, enforcement of which starts in May 2018.

However, for our purposes here, let’s talk about two factors in particular: network bandwidth and network latency.

Bandwidth is effectively a measure of the capacity of the available network pipe between the machine that’s sending the data and the machine receiving it. It’s hard to generalise how much data is too much to move. It partly relates to the costs associated with moving and storing the data, such as network charges. It’s also just a function of the limited capacity of the low-power wireless networks (such as ZigBee) that sensors may use. However, with machinery such as jet engines collecting hundreds of gigabytes of data per use, it’s not hard to see how shipping all that data back to a central collection point (along with managing it, backing it up, and then using it for something) can get difficult.
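To put rough numbers on that, here is a back-of-the-envelope sketch. The 500GB payload and the three link speeds are illustrative assumptions, not measurements from any real deployment, and the function `transfer_time_seconds` is a made-up helper that ignores protocol overhead, retries, and contention, so real transfers would be slower still.

```python
def transfer_time_seconds(payload_bytes: float, link_bits_per_second: float) -> float:
    """Ideal time to move a payload over a link, ignoring all overhead."""
    return payload_bytes * 8 / link_bits_per_second


GB = 10**9
payload = 500 * GB  # assumption: one reading of "hundreds of gigabytes" per use

# Assumed nominal link rates, for illustration only.
links = {
    "250 kbit/s low-power radio": 250e3,
    "100 Mbit/s wired link": 100e6,
    "1 Gbit/s wired link": 1e9,
}

for name, rate in links.items():
    hours = transfer_time_seconds(payload, rate) / 3600
    print(f"{name}: {hours:,.1f} hours")
```

Even under these generous assumptions, the low-power radio needs thousands of hours to move what the machine produced in a single use, which is why nobody tries to ship raw data over that kind of link.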

We think less about latency on a day-to-day basis. As consumers, we’re mostly exposed to it with activities like multiplayer video games. We see something, take an action in response such as pressing a trigger, and then see the effects of that action on the screen. The sequence may not involve much data but, if it takes longer than about a tenth of a second, we see it as “lag” and the game becomes increasingly hard to play effectively.

In the case of Industrial IoT systems, latency doesn’t matter if you’re just sending data back to HQ for later analysis. But if sensor data is collected so that a train can be stopped right now in response to an object on the tracks or a mechanical failure, every fraction of a second counts.

What’s the data anti-gravity for these two factors?

The short answer is that there isn’t one in an absolute sense. The capacity of any realistic network is limited relative to the amount of data we can collect even today. And the number of sensors is only going up. At a fundamental level, the speed of light sets a floor on latency. “You cannae change the laws of physics!” as Star Trek’s Scotty would have put it. (To be pedantic, it’s the speed at which a data packet can travel through the network, which is a bit slower than light, but you get the idea.)
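The latency floor is easy to estimate. The sketch below assumes signals travel through the network at about two-thirds of the speed of light in a vacuum, a common rule of thumb for optical fibre; the `velocity_factor` default and the sample distances are assumptions, and the result covers propagation delay only, with no allowance for routing, queuing, or processing.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # in a vacuum


def min_round_trip_ms(distance_km: float, velocity_factor: float = 0.67) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    velocity_factor is the assumed fraction of light speed at which
    a signal moves through the medium.
    """
    one_way_s = distance_km * 1000 / (SPEED_OF_LIGHT_M_PER_S * velocity_factor)
    return 2 * one_way_s * 1000


for km in (1, 100, 2000):
    print(f"{km:>5} km away: at least {min_round_trip_ms(km):.3f} ms round trip")
```

No amount of engineering gets below these figures for a given distance; the only lever is to shorten the distance.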

However, you can mitigate data gravity.

One way is to essentially redefine the problem. For example, narrow the focus to the gravity that holds data to a particular cloud provider on the backend once it’s been sent there from the edge.

As Khosla put it: “Most of these [data gravity causes] are not critical issues for ‘once a year data anti-gravity’ threat that CIOs need to hold over cloud vendors’ head. They are critical issues for real time multi-cloud operations but solvable.” Make moving data around a leisurely and occasional activity and many problems largely evaporate.

We can also design an IoT system’s architecture to make useful tradeoffs for a particular application. As James Urquhart puts it: “I think it’s more about escape velocity and delta-v than ‘anti-gravity’ per se.” In the case of space travel, we have levers like rocket type, payload weight, and how long it takes to reach our destination. We can’t make trips arbitrarily fast or payloads arbitrarily heavy. The laws of physics again. But we can relax constraints that aren’t important to us to optimise for those that are.

In the case of IoT, one of the most effective approaches is often to stick an additional level of computing between the sensors and the cloud backend. We sometimes see this in home setups in the form of some sort of hub. But in industrial settings, this gateway computer will often be a full-fledged server of some sort. (In practice, large IoT installations will often have many specialised systems and layers of computing and storage but, for our purposes, we can mostly think of them logically as three layers: sensors, gateways, and cloud.)

The gateway can then serve two functions to mitigate the effects of data gravity.

To mitigate latency, a gateway can be located physically close to a group of sensors and take any actions that require immediate attention. You’ll never reduce latency to zero but, with enough gateways and an optimised network, you can design an IoT system that responds quickly and doesn’t need to phone home for instructions all the time.
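As a minimal sketch of that idea, the gateway below decides on the spot whether a reading needs action, rather than asking the cloud. Everything here is hypothetical: the `Reading` type, the `STOP_DISTANCE_M` threshold, and the `stop_train` callback stand in for whatever a real system would use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Reading:
    sensor_id: str
    obstacle_distance_m: float  # hypothetical measurement from a trackside sensor


STOP_DISTANCE_M = 200.0  # assumed threshold, for illustration only


def handle_at_gateway(reading: Reading, stop_train: Callable[[str], None]) -> bool:
    """Act immediately on urgent readings; return True if the gateway acted."""
    if reading.obstacle_distance_m <= STOP_DISTANCE_M:
        stop_train(reading.sensor_id)  # local action, no cloud round trip
        return True
    return False  # not urgent; can be batched for the cloud later


# Usage: record which sensors triggered a stop.
actions: list[str] = []
handle_at_gateway(Reading("track-cam-7", 120.0), actions.append)
handle_at_gateway(Reading("track-cam-8", 900.0), actions.append)
print(actions)
```

The point of the design is that the urgent path never leaves the local network; the cloud only hears about the event afterwards.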

Once its urgent business is out of the way, a gateway can then start mitigating the bandwidth problem. Much of the time that we collect data we really only care about it if it’s not normal in some way. Too hot? We care. Too cold? We care. Just right? Not so much.

We may also care more about trends and averages than the individual data points. Therefore a gateway can filter or aggregate data for later analysis and only send that subset back to the cloud. To be sure, we may never be quite certain whether something we measured is going to be useful some day. However, based on our knowledge about the machinery or environment in question, we can probably make intelligent decisions about what to save and what to toss.
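A gateway’s filtering and aggregation might look something like the sketch below. The `NORMAL_RANGE` bounds and the sample window of temperature readings are assumptions chosen for illustration; a real system would pick both from knowledge of the machinery in question.

```python
from statistics import mean

NORMAL_RANGE = (10.0, 30.0)  # assumed "just right" band, in degrees Celsius


def summarise(readings: list[float], normal_range: tuple[float, float] = NORMAL_RANGE) -> dict:
    """Reduce a non-empty window of readings to a summary plus any outliers."""
    low, high = normal_range
    return {
        "count": len(readings),
        "mean": mean(readings),
        "min": min(readings),
        "max": max(readings),
        # Keep the individual values only when they fall outside the band.
        "anomalies": [r for r in readings if not low <= r <= high],
    }


window = [21.0, 21.5, 22.0, 35.0, 21.5, 4.0]  # made-up sample data
summary = summarise(window)
print(summary)
```

Only the summary goes back to the cloud: a handful of numbers and the two out-of-range readings, rather than every data point in the window. The tradeoff is the one described above, since whatever the gateway tosses is gone for good.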

There are ways to reduce the impact of data gravity. What we can’t do is ignore it. Data obeys rules just like mass does. IoT systems and anything else working with large quantities of data need to be designed with that reality in mind. Or they may come crashing to Earth.

Gordon Haff is a Red Hat technology evangelist and former IT advisor with Illuminata and Aberdeen analyst.